I never imagined an app could understand me better than some of the therapists I’d seen. But one night, overwhelmed by anxiety and unable to sleep, I opened a chatbot I’d downloaded on a whim. Within minutes, it wasn’t just asking random questions — it was guiding me through a reflection I didn’t know I needed. The way it listened, responded, and gently nudged me toward clarity felt strangely human. That unexpected moment became my first real experience with AI mental health tools, and honestly, it reshaped everything I thought I knew about emotional care.

Before that night, I’d always associated therapy with physical offices, booked appointments, and waiting rooms filled with outdated magazines. But here I was, curled up in bed, talking to an AI-driven app that made me feel heard, really heard. These tools aren’t perfect, but they are surprisingly intuitive. Many use machine learning to adapt to your emotional patterns, and many now include mood tracking, journaling, and CBT-style coaching. From that night on, I began weaving them into my daily life. They became a quiet support system: always available, never judgmental, and surprisingly helpful at the moments I needed them most.

Table of Contents

- What Are AI Mental Health Tools?

- Why More People Are Turning to AI for Mental Health Support

- Top AI-Powered Mental Health Tools in 2025

- Benefits of Using AI for Emotional Support

- Limitations and Ethical Considerations

- Real User Stories: How AI Tools Are Making a Difference

- What’s Next? The Future of AI in Mental Health

- Frequently Asked Questions

- Join the Conversation

What Are AI Mental Health Tools?

AI mental health tools are digital products that use artificial intelligence to support emotional and psychological wellbeing in adaptive ways. They rely on technologies such as natural language processing (NLP), machine learning, and sentiment analysis to interpret what users write or say and to respond in a supportive, empathetic-sounding way. In essence, they aim to complement, and in some respects imitate, parts of the therapeutic process. That can mean offering guidance, tracking emotions over time, and sometimes flagging early warning signs based on behavioral data.

For example, chatbots like Woebot use principles from cognitive behavioral therapy (CBT) to help users challenge negative thoughts in real time. Wysa, by contrast, combines AI-driven conversations with access to human mental health coaches for deeper support. Other AI mental health tools sync with wearables to track physiological signals such as heart rate and sleep patterns. This can add useful context to a user’s self-reported mood.

In reality, AI mental health tools are not limited to apps — they represent a growing ecosystem of digital mental health support that continues to evolve rapidly. As technology becomes more personalized and accessible, these tools are breaking barriers for people who may not otherwise seek help.

Why More People Are Turning to AI Mental Health Tools for Support

In today’s fast-paced world, people need emotional support that’s not only effective but also immediate, judgment-free, and affordable. That’s exactly why AI mental health tools are gaining enormous traction. These technologies are reshaping psychological care by providing solutions that suit modern lifestyles where stress, anxiety, and burnout are increasingly common.

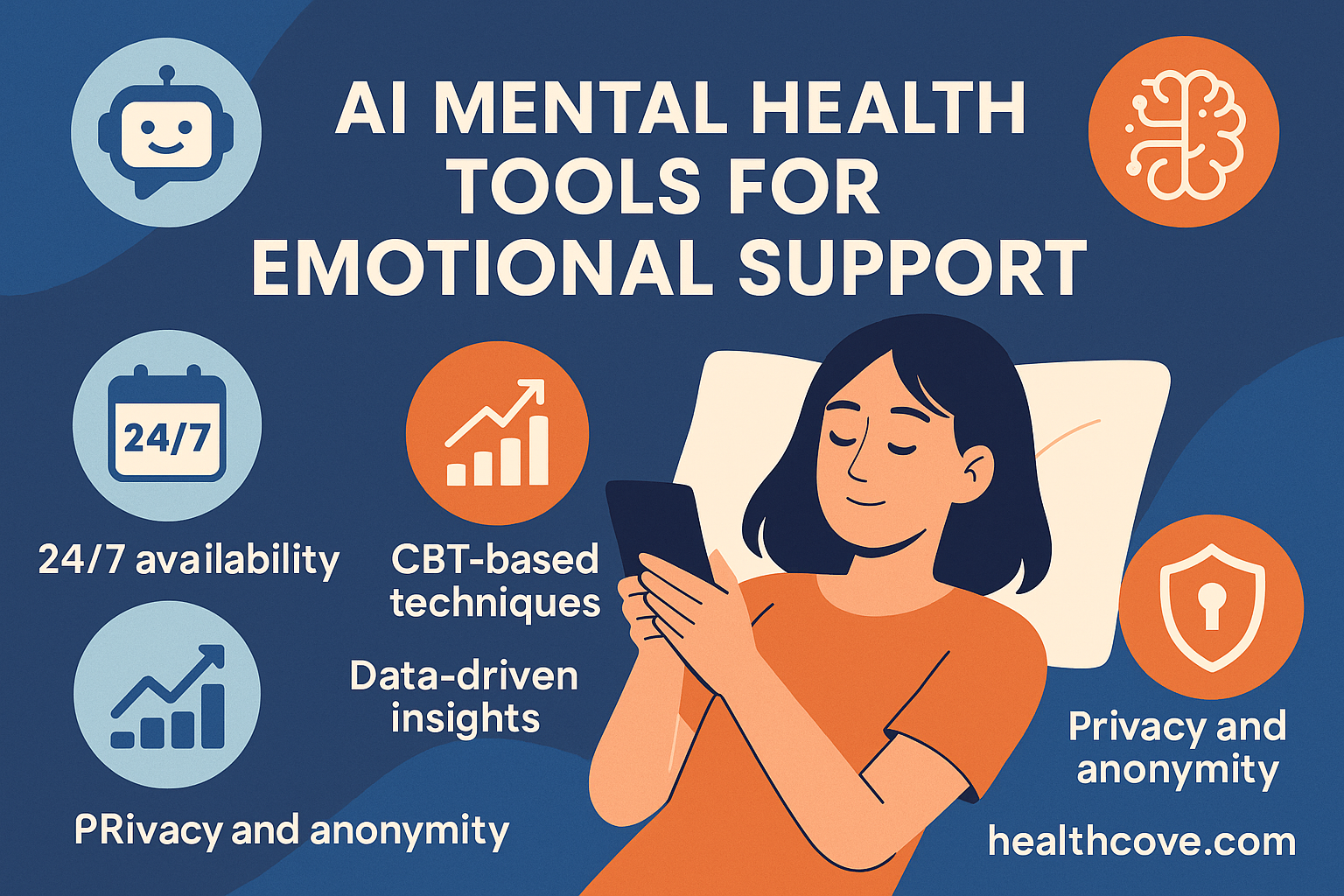

- 24/7 availability: Unlike traditional therapy sessions that require scheduling and travel, these apps are accessible any time — day or night — from the comfort of home. Whether it’s 2 a.m. and you can’t sleep or you need a quick check-in during your lunch break, support is just a tap away.

- Low cost: Many platforms offer free versions with powerful core features, while premium plans remain far more affordable than traditional therapy. This opens the door to support for students, freelancers, or low-income individuals who might otherwise forgo care.

- Privacy and anonymity: For those hesitant to open up face-to-face, especially in small communities, digital tools provide a nonjudgmental space to speak freely. The assurance of anonymity often encourages users to express deeper, more vulnerable thoughts.

- Data-driven insights: These systems often include built-in mood trackers, journaling, and behavioral feedback powered by AI. Over time, users can identify emotional patterns and triggers — leading to improved self-awareness and early action.

These tools are also improving access in underserved areas. People in rural communities, people with disabilities, and those facing cultural stigma can now receive consistent emotional support that previously felt out of reach.

Top AI Mental Health Tools You Should Try in 2025

Below are some of the most widely used and talked-about AI mental health tools in 2025:

1. Woebot – The CBT-Based Chatbot That Feels Human

Among AI mental health tools, Woebot stands out for its grounding in psychology. Built on cognitive behavioral therapy (CBT), it engages users in daily emotional check-ins. The conversations feel natural and are often surprisingly relevant. Early clinical research suggests that Woebot can help reduce depressive symptoms in as little as two weeks. Using it can feel a little like texting a therapist, except that replies are instant and there is no judgment.

2. Wysa – Blending AI Chat With Real Human Coaching

Wysa pairs AI-guided conversations with access to certified human coaches, making it one of the more holistic AI mental health tools available today. It is strongest at helping users manage anxiety and emotional fatigue, and the company reports more than 5 million users worldwide. Whether you want to talk to the AI or escalate to human help, Wysa adapts to your needs.

3. Youper – The Pocket Therapist for Mood and ADHD

Youper is a rising star among AI mental health tools, known for its smart emotional guidance. It uses artificial intelligence to walk users through brief therapeutic exercises and mood reflections. Many people turn to it to manage anxiety, mood swings, or ADHD. Journaling, mood tracking, and symptom analysis all happen in one intuitive interface.

4. Mindstrong – Predictive AI for Early Mental Health Alerts

Mindstrong takes a clinical approach. It analyzes how you type, scroll, and interact with your phone to look for early signs of emotional distress. Rather than relying only on what you tell it, it connects everyday phone behavior to predictive mental health analytics. This approach is aimed mainly at people at risk of relapse or sudden mood shifts.

5. Replika – Your AI Friend When You Need to Talk

Replika isn’t a therapeutic app in the strict sense, but many consider it one of the most comforting AI mental health tools. It offers companionship through deep, customizable conversations. Users can talk about their day, their worries, or even explore identity questions. For those feeling isolated or emotionally distant, Replika can be a helpful emotional anchor.

Still curious? Discover more AI mental health tools in our full Mental Health Tools Guide.

Benefits of Using AI Mental Health Tools for Emotional Support

In reality, AI mental health tools aren’t designed to replace therapists — they’re here to enhance emotional care, especially between sessions. These tools bring a new dimension of accessibility, adaptability, and consistency that traditional therapy can’t always match. Let’s explore some of the most powerful benefits:

- Scalability: One of the biggest advantages of AI mental health tools is that they can support thousands of users at the same time. This kind of scalable access is especially valuable in countries with few mental health professionals.

- Consistency: Unlike humans, AI mental health tools don’t get tired, distracted, or emotionally overwhelmed. The experience remains stable and judgment-free, regardless of how many times a user checks in. This consistency builds trust and encourages users to open up more frequently.

- Customization: Over time, these AI mental health tools adapt to users’ behaviors, language patterns, and emotional rhythms. Some platforms even personalize responses based on journaling history or mood tracking, creating a deeply personal support experience tailored to each individual.

- Early intervention: Some advanced AI mental health tools are designed to flag possible signs of distress before the user consciously notices them. By analyzing typing speed, word choice, or sentiment, they can prompt proactive support and help interrupt emotional spirals before they deepen.

Limitations and Ethical Concerns of AI Mental Health Tools

While AI mental health tools offer impressive support options, it’s essential to address the limitations and ethical challenges they still face. As adoption increases, users and developers must remain mindful of potential risks tied to data security, fairness, emotional depth, and crisis readiness. Here are some of the most pressing concerns to consider:

1. Privacy Risks in AI-Powered Emotional Tools

Data security is a major concern. Many platforms collect highly sensitive information — such as journal entries, mood patterns, and emotional reflections. Are these details encrypted? Where is your data stored? Users deserve full transparency, strong privacy controls, and assurances against unauthorized access or breaches.

2. Hidden Biases Within AI Support Platforms

Algorithms are shaped by the data they learn from. If that data lacks diversity, results can be biased or even harmful. This poses a real risk for people from underrepresented communities. Developers must test their systems across cultures and identities to ensure fair and inclusive responses.

3. Empathy Gaps in Digital Support

Even the smartest systems cannot replicate the warmth of human connection. While chatbots can simulate supportive dialogue, they don’t actually feel or understand pain. For individuals healing from trauma or navigating deep emotional issues, this emotional void may feel discouraging or unhelpful.

4. Not a Replacement for Crisis Support

These platforms are not equipped to manage emergencies. If someone is in immediate danger or experiencing a mental health crisis, they need human intervention — not automated messages. It’s crucial that tools include emergency referral options and clear disclaimers about their limits.

5. The Urgent Need for Ethical Oversight

Institutions like the WHO stress the importance of ethical frameworks in digital healthcare. As AI therapy evolves, strong regulations are needed to ensure safety, transparency, and respect for human dignity in all forms of mental health support.

Real User Stories: How AI Mental Health Tools Are Making a Difference

Maria, 29 – Finding Support in Rural Isolation

“I live in a rural area with no therapists nearby. Woebot became my daily check-in, helping me cope after a breakup.”

Maria’s story highlights how digital wellness platforms can bridge care gaps in underserved communities. With no mental health services nearby, she turned to Woebot for consistent support. Its friendly check-ins helped her track emotions, reflect on her healing, and build resilience — especially during moments of vulnerability. For her, the chatbot became a lifeline when human therapy wasn’t an option.

James, 34 – Managing ADHD Through Digital Emotional Support

“As someone with ADHD, I use Youper to monitor my mood swings and stay accountable. It feels like having a smart coach in my pocket.”

For James, emotional regulation has always been difficult. With Youper, he found a structured way to understand mood shifts and stay focused. Its use of CBT-style techniques and daily mood tracking helped him take control of his routines. This kind of personalized emotional technology offers him the stability he once struggled to achieve — without overwhelming him.

Nicole, 42 – Staying Grounded While Constantly Traveling

“I travel a lot for work. Replika has been a comforting presence — not perfect, but it listens when I need to vent.”

Between flights and hotel rooms, Nicole needed a source of connection she could reach anytime. Replika became that quiet companion, offering someone to “talk” to when no one else was available. Replika is not a licensed therapist, but it provided emotional check-ins and conversations that helped her decompress from the stress of a transient lifestyle. In her words, it wasn’t perfect, but it was enough to make her feel heard when it mattered most.

What’s Next? The Future of AI Mental Health Tools

The Rise of Wearables and Biometric Integration

The next generation of emotional support will merge artificial intelligence with wearable devices and biometric feedback. Rather than relying solely on user input, mental health apps may soon respond to physiological signals. Imagine a smart ring detecting stress through heart rate changes and instantly launching a calming visualization on your smartwatch — no need to even ask for help.

Immersive Therapy Through Virtual Reality

Innovators are also experimenting with AI-enhanced virtual reality. Instead of chatting with a bot, users could explore digital landscapes crafted to suit their emotional state. Picture walking through a peaceful forest that AI generates based on your mood. These immersive experiences are being explored for trauma recovery, anxiety reduction, and guided meditation, and they can adapt in real time to your needs.

Smarter Conversations and Global Access

One of the most transformative developments is in language processing. Advanced systems are learning to detect signs of emotional distress or suicidal thinking through subtle language cues. At the same time, multilingual support is expanding rapidly, which makes tech-based support more inclusive. People around the world may soon be able to use mental wellness tools in their native languages, overcoming cultural and linguistic barriers that have long limited traditional therapy.

Curious about what’s already available? Check out our guide to the Top Mental Wellness Tools that reflect these innovations.

Frequently Asked Questions About AI Mental Health Tools

Are AI mental health tools safe?

Many reputable platforms use security measures such as encryption and anonymized data handling, but practices vary widely from app to app. Choose services built by reputable teams, read the privacy policy carefully, and favor tools that clearly explain how your emotional data is collected, stored, and used.

Can they replace traditional therapy?

Not really. These apps are great for support between sessions or for people not ready to speak with a therapist. However, they can’t match the depth and emotional nuance of face-to-face counseling. Think of them as helpful companions, not full replacements.

Are there any free emotional support apps powered by AI?

Yes, several popular options offer solid free versions. Tools like Woebot, Wysa, and Replika provide core emotional wellness features at no cost. Premium upgrades unlock extras such as coaching or advanced analytics, but for basic check-ins and mood tracking the free tiers are often enough.

Can these apps help in a mental health emergency?

No, they’re not designed for crises. If you’re in immediate danger or in severe distress, call emergency services or a crisis line such as the 988 Suicide & Crisis Lifeline (US). Some platforms offer referral links, but they can’t replace human emergency care.

Join the Conversation

Have you tried any AI mental health tools? What was your experience like? Share your story in the comments below, or subscribe to our newsletter for more insights on digital mental health innovations. Let’s explore the future of emotional care — together.
